Eps 4: Language Modelling Artificial Intelligence

Unlike n-gram models, which analyze text in one direction (backwards), bidirectional models analyze text in both directions, backwards and forwards.

More complex language models are better at NLP tasks, because language itself is extremely complex and always evolving.

An exponential model or continuous space model might be better than an n-gram for NLP tasks, because they are designed to account for ambiguity and variation in language.

Host

Soham Castillo

Podcast Content

The Transformer was originally shown to be significantly more powerful than recurrent neural networks in machine translation. It can be made much deeper and relies on attention mechanisms that learn the contextual relationships between words in a text.
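To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only, not the full Transformer: the three-word sentence and its 2-dimensional vectors are made-up values, and real models use learned projections for queries, keys and values, which are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # How strongly this word attends to every word in the text.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Each output is a weighted average of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Hypothetical word vectors for a three-word sentence (assumed values).
words = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: every word looks at every word, including itself.
contextual = attention(vectors, vectors, vectors)
```

Each entry of `contextual` is a blend of all the word vectors, weighted by how similar each word is to the one being processed. That blending is what lets the model use context from anywhere in the sentence.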

It is difficult to find machine-learning tasks that both drive the development of better memory architectures and advance artificial general intelligence. One task, however, has seen particularly exciting progress in the past year.

Statistical language modelling is a field of research that we believe could be valuable for this purpose. Language modelling is the study of language models and their use in tasks that require language understanding, such as speech recognition and speech communication. It has been one of the most promising areas of artificial intelligence research in the last decade.

Language modelling is also used to score and generate text in various natural language processing tasks. It plays a central role in the development of machine learning for speech recognition, speech communication and speech processing.

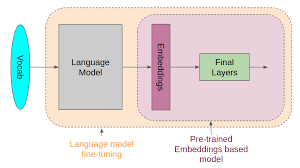

Neural language models are based on neural networks and are often considered an advanced approach to NLP tasks. In recent years, neural language modelling has become more popular and is expected to become the preferred approach. Neural language modelling, as the approach is called, refers to language models built on neural networks.

More complex language models are therefore better suited to NLP tasks. Neural language models overcome many of the problems that earlier models struggle with and can be used for a variety of languages, such as English and Mandarin Chinese. Compared to the n-gram model, exponential and continuous space models have proven to be a better option for NLP tasks, as they are designed to handle ambiguity and variation in language.

Neural language models are language models based on neural networks. They exploit the ability of neural networks to learn distributed representations in order to reduce the impact of the curse of dimensionality. In the context of learning algorithms, this refers to the need for huge numbers of training examples when learning highly complex functions. A language model is a function, or an algorithm for learning such a function, that captures the statistics of word sequences in a language, typically so that it can predict the next word given the previous ones.
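As a rough illustration of predicting the next word from the previous one, the sketch below builds the simplest possible count-based language model, a bigram model. It is not a neural model; the tiny corpus and the function names `train_bigram` and `next_word_probs` are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the start and end of a sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Estimate P(next | prev) by relative frequency (no smoothing)."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}  # the previous word never appeared in training
    return {word: n / total for word, n in counts[prev].items()}

# Toy training corpus (assumed data, for illustration only).
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

model = train_bigram(corpus)
probs = next_word_probs(model, "the")
best_guess = max(probs, key=probs.get)  # most likely word after "the"
```

A model like this gives zero probability to any word pair it has never seen, and the number of possible pairs grows very quickly with vocabulary size. That sparsity is the curse of dimensionality in practice, and it is the problem that distributed representations are meant to ease.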

In June 2018, researchers from OpenAI published a paper on generative pre-training, showing how a generative language model can acquire world knowledge by pre-training on a large and diverse body of unlabelled text, without the need for hand-labelled data. GPT models are unsupervised Transformer language models that use machine learning to analyze a sequence of words and other data and predict the text that follows. They essentially go back to the original edition of the example and present it to the model as a series of examples.

Supervised learning is one of the most popular approaches to machine learning in artificial intelligence. The 21st century has brought a whole new set of challenges for machine-learning models aiming at state-of-the-art results, and supervised learning has been a key component in meeting them.

Over the last two decades, NLP research has explored the use of neural machine translation and memory-based networks for translation. These approaches include word embeddings, which capture the semantics of questions and answers at a high level and allow us to predict a word sequence by modelling the entire sentence in a single integrated model using artificial neural networks.

Training such models can take up to a week of computing time, and the trained models can be slow to run. Models trained only on public data sets often cannot meet the quality and performance expectations of enterprise applications. Moreover, multiple models must work together to generate a single query response, so each model can afford only a few milliseconds of computing time. One approach to meeting this challenge is to first pre-train a model on a generic dataset and then fine-tune it on a smaller, task-specific dataset.

GPUs can be used both to train deep learning models and to run inference with them, delivering the same level of performance as traditional deep learning tools. So how can we bring large language models, some of which have been described as too dangerous to release, into enterprise applications such as speech recognition?

The field of natural language processing has proven to be one of the most promising areas of artificial intelligence research in recent years. NLP is part of a broader field of AI that enables us to answer these questions. However, there is an even more important and exciting field than the one we have today: language processing.